Types of Vector Stores and Their Use Cases

1. In-Memory Vector Stores

To understand the role vector stores play, it helps to recognize that there are several types, each with distinct features and purposes. The first is the in-memory vector store, which keeps all vectors in RAM. Because it avoids disk I/O entirely, it delivers very low latency, which makes it the best fit when speed matters most and the dataset fits within available memory.
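As an illustration of why this design is fast, here is a minimal sketch of an in-memory store, assuming a plain Python dict as the storage layer and an exhaustive cosine-similarity scan for search. The class and method names (`InMemoryVectorStore`, `add`, `search`) are hypothetical and do not correspond to any particular product.

```python
import math


class InMemoryVectorStore:
    """Hypothetical toy store: vectors live in a dict, so every lookup and
    similarity search touches RAM only (no disk I/O)."""

    def __init__(self):
        self._vectors = {}  # id -> list of floats

    def add(self, vec_id, vector):
        self._vectors[vec_id] = list(vector)

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query, k=3):
        # Brute-force scan: score every stored vector, keep the k best.
        scored = [(self._cosine(query, v), vid) for vid, v in self._vectors.items()]
        scored.sort(reverse=True)
        return [vid for _, vid in scored[:k]]


store = InMemoryVectorStore()
store.add("cat", [0.9, 0.1, 0.0])
store.add("dog", [0.8, 0.2, 0.1])
store.add("car", [0.0, 0.1, 0.9])
print(store.search([1.0, 0.0, 0.0], k=2))  # ['cat', 'dog']
```

A brute-force scan is used here only for clarity; it is exact but grows linearly with the number of vectors, which is why larger stores add an index.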

Keeping embeddings in RAM also makes retrieval during similarity searches efficient. The trade-off is capacity: memory is limited, and scaling beyond the available RAM may require a distributed system or a hybrid solution that combines in-memory and disk-based storage. Because RAM is volatile, an in-memory vector store also risks losing data if the system crashes or restarts. These limitations can be mitigated with techniques such as periodic snapshots or replication to disk.
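The snapshot technique mentioned above can be sketched as follows, assuming the in-memory data is a plain `{id: vector}` dict and JSON is an acceptable (if not very compact) on-disk format. The helper names `snapshot` and `restore` are hypothetical. Anything written after the most recent snapshot would still be lost in a crash; replication or a write-ahead log would be needed to close that gap.

```python
import json
import os
import tempfile


def snapshot(vectors, path):
    """Hypothetical helper: persist an in-memory {id: vector} dict by writing a
    temp file in the same directory, then renaming it over the old snapshot."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(vectors, f)
    os.replace(tmp_path, path)  # atomic rename on POSIX systems


def restore(path):
    """Hypothetical helper: reload the most recent snapshot after a restart."""
    if not os.path.exists(path):
        return {}
    with open(path) as f:
        return json.load(f)


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "vectors.json")
    store = {"cat": [0.9, 0.1, 0.0]}
    snapshot(store, path)
    store = None           # simulate a restart wiping RAM
    store = restore(path)
    print(store)           # {'cat': [0.9, 0.1, 0.0]}
```

Writing to a temporary file first means a crash during the dump leaves the previous snapshot intact instead of a half-written file.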

2. Disk-Based Vector Stores

Another type is the disk-based vector store, which prioritizes scalability and cost-efficiency. It suits workloads with large datasets, such as machine learning pipelines and semantic search. Even though the vectors are stored on disk, disk-based vector stores can still run fast similarity searches over high-dimensional data by using specialized indexing techniques that avoid reading every vector for each query.

Disks also offer far greater capacity than RAM, which lets these stores manage massive collections of vectors. Unlike in-memory vector stores, disk-based vector stores keep data persistent across crashes and restarts, so stored information, including sensitive data, is not lost.
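Persistence and bounded memory use can be illustrated with a minimal sketch, assuming fixed-size binary records (an int64 ID followed by float32 components) appended to a single file. The class `DiskVectorStore` is hypothetical, and it deliberately uses a brute-force scan rather than the specialized on-disk indexes real systems rely on; it only shows that data survives a restart and that search can stream the file instead of loading it into RAM.

```python
import heapq
import math
import os
import struct
import tempfile


class DiskVectorStore:
    """Hypothetical toy store: fixed-size records (int64 id + `dim` float32s)
    appended to one binary file. Nothing is cached in RAM between calls."""

    def __init__(self, path, dim):
        self.path = path
        self.record = struct.Struct(f"<q{dim}f")  # little-endian id + floats

    def add(self, vec_id, vector):
        with open(self.path, "ab") as f:
            f.write(self.record.pack(vec_id, *vector))

    def search(self, query, k=3, batch=1024):
        """Brute-force cosine search that streams the file in batches, so
        memory use is bounded by `batch`, not by the dataset size."""
        if not os.path.exists(self.path):
            return []
        qn = math.sqrt(sum(x * x for x in query)) or 1.0
        best = []  # min-heap of (score, id) holding the current top k
        with open(self.path, "rb") as f:
            while chunk := f.read(self.record.size * batch):
                for vec_id, *vec in self.record.iter_unpack(chunk):
                    vn = math.sqrt(sum(x * x for x in vec)) or 1.0
                    score = sum(a * b for a, b in zip(query, vec)) / (qn * vn)
                    if len(best) < k:
                        heapq.heappush(best, (score, vec_id))
                    else:
                        heapq.heappushpop(best, (score, vec_id))
        return [vec_id for _, vec_id in sorted(best, reverse=True)]


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "vectors.bin")
    store = DiskVectorStore(path, dim=3)
    store.add(1, [0.9, 0.1, 0.0])
    store.add(2, [0.0, 0.1, 0.9])
    reopened = DiskVectorStore(path, dim=3)       # simulate a restart
    print(reopened.search([1.0, 0.0, 0.0], k=1))  # [1]
```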

For industries managing large-scale datasets, disk-based vector storage is the practical choice for storing very large volumes of vector data at relatively low cost. Its access times are slower than those of in-memory vector stores, because disk reads are slower than RAM reads and because index management and persistence (especially in distributed systems) add complexity. Even so, it remains an efficient component for large-scale applications.

3. Distributed Vector Stores

Aside from in-memory and disk-based vector stores, organizations with vast datasets can use distributed vector stores, which provide fault tolerance, scalability, and high performance for large-scale data operations. Data is replicated across multiple nodes so that the system keeps operating if a machine or network link fails. Load-balancing mechanisms spread queries across those nodes so that no single node is overloaded, which keeps query responses consistently fast.
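Replication combined with load balancing can be sketched as a round-robin router over replicas that hold the same data, skipping replicas marked as down. `ReplicaRouter` and the node names are hypothetical; production systems typically detect failures with health checks or heartbeats rather than explicit `mark_down` calls.

```python
import itertools


class ReplicaRouter:
    """Hypothetical round-robin load balancer over replicas of the same data.
    Unhealthy replicas are skipped, so queries keep succeeding (failover)."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self.down = set()
        self._cycle = itertools.cycle(self.replicas)

    def mark_down(self, name):
        self.down.add(name)

    def mark_up(self, name):
        self.down.discard(name)

    def pick(self):
        # Try each replica at most once per call before giving up.
        for _ in range(len(self.replicas)):
            candidate = next(self._cycle)
            if candidate not in self.down:
                return candidate
        raise RuntimeError("no healthy replica available")


router = ReplicaRouter(["node-a", "node-b", "node-c"])
print([router.pick() for _ in range(4)])  # ['node-a', 'node-b', 'node-c', 'node-a']
router.mark_down("node-b")
print([router.pick() for _ in range(3)])  # ['node-c', 'node-a', 'node-c']
```

Round-robin is the simplest balancing policy; least-loaded or latency-aware selection are common alternatives when replicas differ in capacity.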

Distributed vector stores also split large datasets into smaller segments, known as shards, and store them on different nodes. This leaves room for growth: adding nodes adds capacity, and each node searches only its own shard rather than the whole dataset. In broad terms, the system ingests data, assigns it to shards, and builds an index on each shard to support similarity search.
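One common way to assign vectors to shards is hash partitioning. The sketch below assumes string or integer IDs and a fixed shard count; `shard_for` and `partition` are hypothetical names, and the specific hash (SHA-1 via `hashlib`) is an illustrative choice, not something a particular vector store is known to use.

```python
import hashlib


def shard_for(vec_id, num_shards):
    """Hypothetical helper: map an ID to a shard with a stable hash. hashlib is
    used instead of Python's salted built-in hash() so every process agrees."""
    digest = hashlib.sha1(str(vec_id).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(vectors, num_shards):
    """Split {id: vector} into num_shards smaller dicts, one per node."""
    shards = [{} for _ in range(num_shards)]
    for vec_id, vec in vectors.items():
        shards[shard_for(vec_id, num_shards)][vec_id] = vec
    return shards


data = {f"doc-{i}": [float(i), 1.0] for i in range(10)}
shards = partition(data, num_shards=3)
print([len(s) for s in shards])  # exact sizes depend on the hash; they sum to 10
```

Plain modulo hashing reassigns most IDs when the shard count changes; consistent hashing or range-based sharding are the usual alternatives when shards are added often.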

When a similarity search is issued, the system queries the relevant shards on multiple nodes in parallel and merges their partial results, which shortens response times. This makes distributed stores well suited to real-time applications such as recommendation systems or personalized AI services that must search large volumes of data. The trade-off is that running multiple nodes requires additional storage (for replicas) and a fast, reliable network to handle communication between nodes.
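The parallel query-and-merge step is often called scatter-gather. Here is a minimal sketch, assuming each shard is an in-process dict standing in for a remote node and that vectors are normalized so a dot product can serve as the similarity score. The function names are hypothetical; threads are used because in a real cluster each shard call is network I/O.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def search_shard(shard, query, k):
    """Local top-k on one shard; stands in for a network call to one node."""
    return heapq.nlargest(k, ((dot(query, v), vid) for vid, v in shard.items()))


def scatter_gather(shards, query, k=3):
    # Scatter: send the query to every shard concurrently.
    with ThreadPoolExecutor(max_workers=max(1, len(shards))) as pool:
        partials = list(pool.map(lambda s: search_shard(s, query, k), shards))
    # Gather: the global top k is contained in the union of per-shard top-k lists.
    merged = heapq.nlargest(k, (hit for part in partials for hit in part))
    return [vid for _, vid in merged]


shards = [
    {"a": [1.0, 0.0], "b": [0.5, 0.5]},  # shard held by node 1
    {"c": [0.9, 0.1], "d": [0.0, 1.0]},  # shard held by node 2
]
print(scatter_gather(shards, [1.0, 0.0], k=2))  # ['a', 'c']
```

Each node only has to return its own top k, so the amount of data sent back over the network stays small regardless of shard size.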

4. Specialized Vector Stores

Specialized vector stores, by contrast, are built for industry-specific data. Unlike general-purpose in-memory, disk-based, or distributed vector stores, they are designed around niche application requirements and act as tailored solutions for handling specialized embeddings. They are used in fields ranging from healthcare and e-commerce to natural language processing, all of which need to manage large datasets. To make similarity search over high-dimensional spaces efficient, specialized vector stores rely on indexing structures such as Hierarchical Navigable Small World (HNSW) graphs, the Inverted File index (IVF), and Product Quantization (PQ).
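Of the three structures named above, IVF is the simplest to sketch. The idea, shown here under simplifying assumptions (pure Python, squared Euclidean distance, a few rounds of plain k-means), is to cluster the vectors, keep one inverted list per cluster centroid, and at query time scan only the `nprobe` closest lists. `IVFIndex` and its parameters are hypothetical names; HNSW and PQ are not shown. Because unprobed lists are skipped, results are approximate unless `nprobe` equals the number of lists.

```python
import random


def l2(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, n_lists, iters=10, seed=0):
    """Plain k-means; the centroids act as the IVF coarse quantizer."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, n_lists)]
    for _ in range(iters):
        groups = [[] for _ in range(n_lists)]
        for p in points:
            nearest = min(range(n_lists), key=lambda c: l2(p, centroids[c]))
            groups[nearest].append(p)
        for c, members in enumerate(groups):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


class IVFIndex:
    def __init__(self, vectors, n_lists=4, seed=0):
        ids = list(vectors)
        self.centroids = kmeans([vectors[i] for i in ids], n_lists, seed=seed)
        # One inverted list of (id, vector) pairs per centroid.
        self.lists = [[] for _ in self.centroids]
        for i in ids:
            self.lists[self._closest_lists(vectors[i], 1)[0]].append((i, vectors[i]))

    def _closest_lists(self, vec, n):
        order = sorted(range(len(self.centroids)), key=lambda c: l2(vec, self.centroids[c]))
        return order[:n]

    def search(self, query, k=3, nprobe=1):
        # Scan only the nprobe nearest lists instead of the whole collection.
        candidates = [item for c in self._closest_lists(query, nprobe) for item in self.lists[c]]
        candidates.sort(key=lambda item: l2(query, item[1]))
        return [i for i, _ in candidates[:k]]


rng = random.Random(42)
data = {i: [rng.random(), rng.random()] for i in range(200)}
index = IVFIndex(data, n_lists=8)
print(index.search([0.5, 0.5], k=3, nprobe=2))
```

Raising `nprobe` trades speed for recall: more lists scanned means fewer missed neighbors but more distance computations.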

Because they are built for industrial workloads, specialized vector stores integrate readily with the existing machine learning models used to generate embeddings. They are well suited to everyday tasks such as semantic search, recommendation systems, image retrieval, and speech recognition, all powered by indexing algorithms tuned to the needs of each sector.
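The integration point with an embedding model can be sketched as a store that accepts any text-to-vector callable. In the sketch below, `toy_embed` is a hypothetical stand-in for a real model: it uses feature hashing of words, so it captures word overlap rather than meaning. `build_index` and `semantic_search` are likewise hypothetical names.

```python
import hashlib
import math


def toy_embed(text, dim=64):
    """HYPOTHETICAL stand-in for a real embedding model: hashes each lowercase
    word into one of `dim` buckets, then L2-normalizes the counts."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int.from_bytes(hashlib.sha256(word.encode("utf-8")).digest()[:4], "big") % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def build_index(docs, embed=toy_embed):
    # The store only needs a text -> vector callable, so a real model can be swapped in.
    return {doc_id: embed(text) for doc_id, text in docs.items()}


def semantic_search(index, text, embed=toy_embed, k=2):
    q = embed(text)  # queries must use the same model as the indexed documents
    scores = {doc_id: sum(a * b for a, b in zip(q, v)) for doc_id, v in index.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


docs = {"d1": "red running shoes", "d2": "quarterly tax report", "d3": "blue running shoes"}
index = build_index(docs)
print(semantic_search(index, "running shoes"))
```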

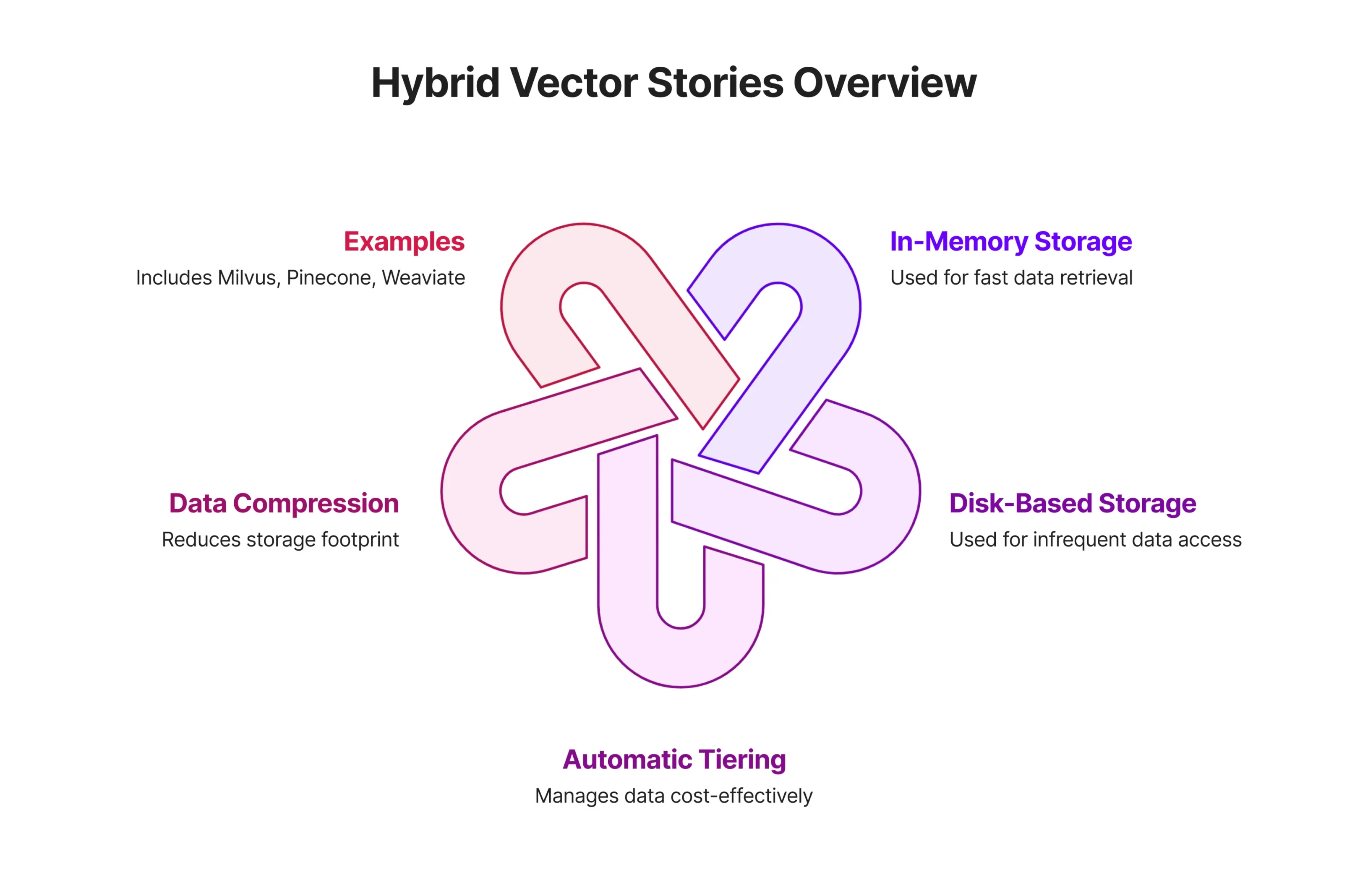

5. Hybrid Vector Stores

Among the existing options, hybrid vector stores stand out because they combine in-memory and disk-based storage to manage vector data. Pairing the two keeps retrieval operations smooth: in-memory storage holds frequently accessed vectors for fast retrieval, while disk-based storage holds vectors that are accessed infrequently.
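A hot/cold split can be sketched with a small least-recently-used (LRU) cache in RAM in front of a persistent mapping on disk. This sketch assumes Python's `shelve` module as the cold tier (so IDs must be strings) and an LRU eviction policy; `TieredVectorStore` is a hypothetical name, and real hybrid stores use their own tiering policies and storage engines.

```python
import os
import shelve
import tempfile
from collections import OrderedDict


class TieredVectorStore:
    """Hypothetical hybrid store. Hot tier: a small LRU cache in RAM.
    Cold tier: a shelve file on disk that holds every vector."""

    def __init__(self, path, hot_capacity=2):
        self.hot = OrderedDict()       # id -> vector, most recently used last
        self.cold = shelve.open(path)  # persistent str id -> vector mapping
        self.hot_capacity = hot_capacity
        self.hot_hits = 0
        self.cold_hits = 0

    def put(self, vec_id, vector):
        self.cold[vec_id] = vector     # durable copy first
        self._promote(vec_id, vector)

    def get(self, vec_id):
        if vec_id in self.hot:
            self.hot_hits += 1
            self.hot.move_to_end(vec_id)
            return self.hot[vec_id]
        self.cold_hits += 1
        vector = self.cold[vec_id]     # slower disk read; KeyError if unknown
        self._promote(vec_id, vector)
        return vector

    def _promote(self, vec_id, vector):
        self.hot[vec_id] = vector
        self.hot.move_to_end(vec_id)
        if len(self.hot) > self.hot_capacity:
            self.hot.popitem(last=False)  # evict the LRU vector; its disk copy stays

    def close(self):
        self.cold.close()


with tempfile.TemporaryDirectory() as d:
    store = TieredVectorStore(os.path.join(d, "cold"), hot_capacity=2)
    for vid, vec in [("a", [1.0]), ("b", [2.0]), ("c", [3.0])]:
        store.put(vid, vec)
    store.get("a")                          # "a" was evicted, so this reads from disk
    print(store.hot_hits, store.cold_hits)  # 0 1
    store.close()
```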

An automatic tiering mechanism moves vectors between the two tiers, keeping data management and retrieval cost-efficient, while compression reduces the storage footprint. Examples of hybrid vector stores include Milvus, Pinecone, and Weaviate, which aim to deliver low-latency performance for real-time AI applications. Combining two distinct storage architectures does, however, make implementation considerably more complex and may introduce additional system overhead.
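As one example of the compression mentioned above, scalar quantization stores each float32 component as a single byte, cutting vector storage roughly fourfold. The sketch below assumes per-vector min/max scaling to 256 levels; `quantize` and `dequantize` are hypothetical helpers, and this is not a description of how Milvus, Pinecone, or Weaviate compress data internally.

```python
def quantize(vector):
    """Hypothetical scalar quantizer: map each component to one unsigned byte
    using the vector's own min/max (stored alongside as two floats)."""
    lo, hi = min(vector), max(vector)
    scale = (hi - lo) / 255 or 1.0  # avoid dividing by zero for constant vectors
    codes = bytes(round((x - lo) / scale) for x in vector)
    return codes, lo, scale


def dequantize(codes, lo, scale):
    # Approximate reconstruction; per-component error is at most scale / 2.
    return [lo + c * scale for c in codes]


vec = [0.12, -0.5, 0.33, 0.9]
codes, lo, scale = quantize(vec)
approx = dequantize(codes, lo, scale)
print(len(codes), "bytes instead of", 4 * len(vec))  # 4 bytes instead of 16
```

The saving comes at the cost of precision, so systems that compress this way often re-rank the top candidates using the full-precision vectors.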